So while in Costa Rica for a surf trip, I thought it would be a fun project to run a little cryptocurrency mining experiment. I have been playing around with a few Raspberry Pis for a while now, and out of curiosity decided to set them up as cryptocurrency miners. Anyone who has been following the Bitcoin (BTC) craze as of late, and has a rough idea of how the mining process works, would know that using a Raspberry Pi as a Bitcoin miner is like bringing a knife to a gun fight (actually it is more like bringing a toothpick to a gun fight). But considering I was going to be out of the country for two weeks with limited internet, I figured I might as well put the Raspberry Pis to some use. After doing a little research, I decided to simultaneously run two Raspberry Pis as cryptocurrency miners, one mining Bitcoin, the other Litecoin, and after a few weeks of run time, evaluate and compare the rate of return on the two mining operations.

For this post, I am going to provide a quick background on the two coins and what this experiment will demonstrate. I will write a separate follow up post with the results later. There has been plenty of press on Bitcoin in the last month, so I won’t go into too much detail about what Bitcoin is, but for those who are not aware, there are also a number of alternative currencies in existence. One of those currencies is called Litecoin (LTC). The advocates of Litecoin advertise the project as ‘the silver to Bitcoin’s gold’. Litecoin is one of a handful of alternative coins that has gained enough traction to get off the ground and it appears to be the most adopted of the alternative currencies. The code for Litecoin was based on the Bitcoin protocol, but modified in a few ways to compensate for some of Bitcoin’s perceived shortcomings.

The primary difference between Bitcoin and Litecoin is the hashing algorithm used in the ‘proof of work‘ phase. Bitcoin and Litecoin mining are done by solving complex problems called proof of work. These proof of work problems are designed to require a fair amount of computing power to solve, however they are easily verifiable, so once a solution is found, it is easy for the network to verify that the solution is valid. This is done by requiring miners to randomly seed a hashing algorithm until they get a certain output. Once the appropriate seed value is found, the solution can be verified by others by simply using the solution seed and running it through the hashing algorithm to check that you get the appropriate output.

For those that aren’t familiar with hashing algorithms, a good analogy to this process is brute-force password cracking (i.e. randomly trying to guess someone’s password). In order to guess someone’s password, you would have to try thousands upon thousands of iterations, but once the correct password is found, it is very easy for someone else to verify that you have found it. The time it takes to find the correct password is fairly random, i.e. it is possible that you could guess it correctly on the first attempt, or it could take you millions of attempts. Adding computing power to the problem will speed up the number of attempts you can make, but it does not guarantee that any individual attempt is more likely to succeed.

With Bitcoin and Litecoin mining, individual miners compete against each other to find the solution to the proof of work problem. The first node that correctly finds the solution is rewarded with a number of coins. Adding computational power to you miners increases the odds that you will find the solution first, but because of the randomness involved in the process, it does not guarantee that you will be the first to solve a particular proof of work. As of late, Bicoin has faced something of an arms race. Bitcoin uses the SHA256 hashing function in its proof of work. This algorithm is highly parallelizable, which roughly means that throwing more computational power at the algorithm will result in faster iterations. Graphical Processing Units (GPU’s) are very well suited to the task, and as such they quickly displaced CPU’s as the preferred Bitcoin mining hardware. More recently, Field Programmable Gate Arrays (FPGAs) and Application Specific Integrated Circuits (ASICs) have taken over the mining scene. FPGAs are programmable chips and ASICs are specially manufactured chips. FPGA’s started coming online a year or so ago and displaced GPU’s. ASICs are starting to appear in mining rigs and will eventually displace FPGA’s. This arms race has largely left the average person standing on the sidelines, as profitable mining rigs now require fairly significant investments to obtain, and your average desktop computer, even if you use the GPU, is fairly useless as a miner, and in fact would probably result in a negative return on your investment after factoring in energy costs.

Litecoin was created in response to this arms race. The designers of Litecoin were upset that Bitcoin mining had left the average user in the dust and felt that a cryptocurrency that was designed with a more level playing field would encourage wider adoption. As such the designers of Litecoin forked the Bitcoin source code and modified the hashing algorithm used in the proof of work. Instead of using the SHA256 function, they changed the code to use the scrypt hash function. The reason, in short, for this change was that the scrypt algorithm is more memory intensive, and as such it is not as parallelizable as SHA256. Therefore, simply adding more raw computational power, in theory, would not have as large of an impact as it would with the SHA256 algorithm. This means that the gap between the hashing power of CPU’s, GPU’s, FPGA’s and ASIC’s is much narrower, thus giving the average person a better chance at staying in the game.

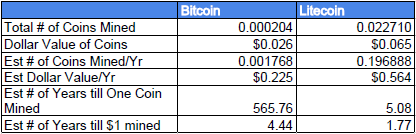

Considering this, I thought it would be fun to see how my Raspberry Pis would fare as miners for these two different currencies. In theory, I should be able to mine much more Litecoin than Bitcoin, as the playing field is a little more level, and due to the lower popularity, there are fewer miners resulting in a lower overall network hash rate (its still like bringing a toothpick to a gunfight, but the guns are limited to single shot rifles, rather than semi-automatics, and there are fewer attendees overall!). Of course, an additional factor that needs to be considered is the market price for each of the coins, as Bitcoin has been trading at over $100 whereas Litecoin has been trading for less than $5. So while I will most likely mine more Litecoin than Bictoin, it is not yet clear which mining operation will result in the most ‘profit’.

In order to facilitate this experiment, it was necessary to sign up for mining pools for each of the currencies. Mining pools are a way to aggregate computing resources in order to cooperatively mine the coins. The major reason for doing this is because for most individuals, it is nearly impossible to successfully complete a proof of work (once again, the mining process is essentially a competition, where the first node to present a valid solution wins). By joining a mining pool, you ensure that you will receive some form of payout, as the combined resources of the pool should be enough to successfully complete some proof of work, and when it does, the rewards are divided among the pool participants based upon the amount of hashing power they contributed. For Bitcoin, I chose to use deepbit.net, and for Litecoin I chose to use notroll.in. I am not endorsing either of these pools as I have not had much experience with either of them (anyone that is seriously considering mining coins should do some research into the pool they use, as there has been numerous reports of shady operators).

So the miners have been running for almost three weeks at this point. I am going to tally up the numbers and post some results and analysis shortly.